Adapting Your Messages Array for Any LLM API: A Developer’s Guide

You’ve got a Messages Array, a tidy JSON package of “system,” “user,” and “assistant” roles, set up to query a model like OpenAI’s GPT-4o. It runs perfectly there. Then Claude demands a separate system field, LLaMA craves a tagged string, Gemini renames the roles, xAI’s Grok 3 accepts OpenAI’s format almost unchanged, and DeepSeek adds its own subtle twist. Adapting that array across large language model (LLM) APIs (GPT-4o, Claude, LLaMA, Gemini, Grok 3, or DeepSeek) isn’t a straight swap. Each API bends roles and structure its own way, and those quirks can tangle your code. With a clear approach, though, you can tweak it to fit anywhere.

The Messages Array, born from OpenAI, seems like it should slip into any API slot. But between Claude’s system split, Grok 3’s lean replies, and the rest, every model tweaks the fit. I’ll guide you through adapting your array, map it to major APIs with examples, and share tools to lighten the load. We’ll kick off with a dishwashing prompt and wrap with a two-step chain-of-thought (CoT) to tie it together.

The Problem: Messages Arrays Vary by API

A Messages Array relies on roles — typically “system” (instructions), “user” (input), and “assistant” (response). Here’s a simple one:

```json
[
  {"role": "system", "content": "You’re a friendly family counselor."},
  {"role": "user", "content": "Who should wash the dishes?"}
]
```

It’s golden for OpenAI. But across APIs, it shifts:

- OpenAI (GPT-4o): Takes it straight.

- Claude: Splits “system” out; messages is for “user” and “assistant.”

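- LLaMA: Raw setups flatten it into one string with the model’s chat-template tags (Llama 3 uses headers like <|start_header_id|>system<|end_header_id|>); hosted ones mimic OpenAI.

- Gemini: “System” moves to a separate system_instruction field, the conversation goes in contents, and “assistant” is renamed “model.”

- DeepSeek: OpenAI-compatible, with an optional JSON output mode.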

- Grok 3 (xAI): Uses OpenAI-style roles — system, user, assistant — via a compatible API, and leans hard into concise, insight-driven responses, reflecting xAI’s push for practical, truth-seeking AI.
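The list above amounts to a lookup table, which code can consult instead of hard-coding each case. A minimal sketch in Python, assuming only the conventions listed here; `ROLE_CONVENTIONS` and `rename_roles` are hypothetical names, not part of any SDK:

```python
# Hypothetical summary of where each API wants the system prompt and what
# it calls the model's own turns (per the list above).
ROLE_CONVENTIONS = {
    "openai":    {"system": "messages",           "assistant": "assistant"},
    "deepseek":  {"system": "messages",           "assistant": "assistant"},
    "grok3":     {"system": "messages",           "assistant": "assistant"},
    "claude":    {"system": "top-level system",   "assistant": "assistant"},
    "gemini":    {"system": "system_instruction", "assistant": "model"},
    "llama_raw": {"system": "template tags",      "assistant": "assistant"},
}

def rename_roles(messages, api):
    """Return a new list with 'assistant' renamed to the API's own term."""
    name = ROLE_CONVENTIONS[api]["assistant"]
    return [{**m, "role": name} if m["role"] == "assistant" else dict(m)
            for m in messages]

history = [
    {"role": "user", "content": "Who should wash the dishes?"},
    {"role": "assistant", "content": "Let's start with your schedules."},
]
print(rename_roles(history, "gemini")[1]["role"])  # model
```

Keeping the conventions in data rather than in scattered if-branches means adding a provider is a one-line change.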

These aren’t just formatting hiccups: tool calls and the handling of conversation history differ too. Your array needs to stretch for each API’s take.

Why It’s Complicated: Role Differences Across LLMs

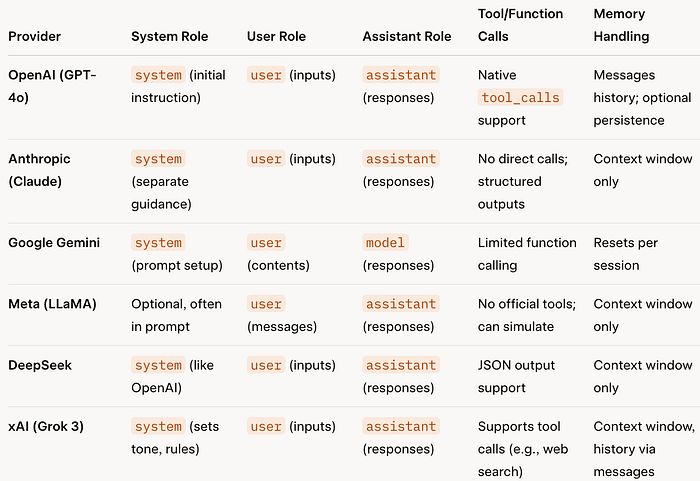

LLM APIs vary because providers chase different goals. Here’s how major models handle roles:

Notes:

- System Role: Most models (OpenAI, Claude, Gemini, DeepSeek, Grok 3) define a distinct system role; LLaMA blends it into the prompt unless wrapped.

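- Tools: OpenAI, Claude, Gemini, DeepSeek, and Grok 3 all offer native function (tool) calling, but each wraps the tool schema differently; LLaMA’s support depends on the model version and how it is served. DeepSeek also offers a JSON output mode.

- Memory: The chat APIs themselves are stateless. Every model here (OpenAI, Claude, LLaMA, Gemini, DeepSeek, Grok 3) sees only what fits in its context window, so history has to be resent in the messages array on each call. OpenAI’s long-term memory is a ChatGPT product feature, not part of the Chat Completions API.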
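Where native tool calling is available, the providers mostly differ in how they wrap the same JSON Schema: OpenAI-compatible APIs nest it under `function.parameters`, Anthropic’s Messages API calls it `input_schema`, and Gemini groups functions under `function_declarations`. A sketch of converting one OpenAI-style definition, assuming simple schemas (Gemini accepts only a subset of JSON Schema); the `assign_chore` tool and the helper names are hypothetical:

```python
# One tool, defined once in OpenAI's "tools" format (also accepted by the
# OpenAI-compatible DeepSeek and xAI APIs). "assign_chore" is hypothetical.
openai_tool = {
    "type": "function",
    "function": {
        "name": "assign_chore",
        "description": "Record who is responsible for a household chore.",
        "parameters": {
            "type": "object",
            "properties": {
                "chore": {"type": "string"},
                "person": {"type": "string"},
            },
            "required": ["chore", "person"],
        },
    },
}

def to_claude_tool(tool):
    """Anthropic's Messages API names the JSON schema "input_schema"."""
    fn = tool["function"]
    return {"name": fn["name"], "description": fn["description"],
            "input_schema": fn["parameters"]}

def to_gemini_tools(tools):
    """Gemini groups functions under "function_declarations" in one tools entry."""
    return [{"function_declarations": [
        {"name": t["function"]["name"],
         "description": t["function"]["description"],
         "parameters": t["function"]["parameters"]}
        for t in tools
    ]}]

claude_tools = [to_claude_tool(openai_tool)]
gemini_tools = to_gemini_tools([openai_tool])
print(claude_tools[0]["input_schema"]["required"])  # ['chore', 'person']
```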

These differences — Grok 3’s tool integration or Claude’s system split — mean your Messages Array needs tailoring to keep it rolling across APIs.
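Because each call starts from a blank slate, keeping history inside the context window is your job. A minimal sketch of trimming a messages array before resending it; the `trim_history` helper is hypothetical, and it counts characters rather than tokens as a rough stand-in:

```python
def trim_history(messages, max_chars=8000):
    """Keep system messages plus the newest turns that fit a size budget.

    Character counts stand in for tokens (an assumption); use the provider's
    tokenizer or token-counting endpoint for real limits.
    """
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_chars - sum(len(m["content"]) for m in system)
    kept = []
    for m in reversed(turns):  # newest first
        if len(m["content"]) > budget:
            break
        kept.append(m)
        budget -= len(m["content"])
    kept.reverse()  # back to chronological order
    # Start the kept window on a user turn, the safest opening across APIs
    while kept and kept[0]["role"] != "user":
        kept.pop(0)
    return system + kept

history = [
    {"role": "system", "content": "You’re a friendly family counselor."},
    {"role": "user", "content": "x" * 50},
    {"role": "assistant", "content": "y" * 50},
    {"role": "user", "content": "Who should wash the dishes?"},
]
print([m["role"] for m in trim_history(history, max_chars=120)])  # ['system', 'user']
```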

Adapting the Messages Array: How-To and Examples

Let’s adapt our dishwashing array:

1. OpenAI (GPT-4o)

- Format: messages with all roles.

- Adaptation: None.

- Example:

```json
{
  "model": "gpt-4o",
  "messages": [
    {"role": "system", "content": "You’re a friendly family counselor."},
    {"role": "user", "content": "Who should wash the dishes?"}
  ],
  "max_tokens": 150
}
```

2. Anthropic (Claude)

- Format: system separate; messages for “user”/“assistant.”

- Adaptation: Pull “system” out.

- Example:

```json
{
  "model": "claude-3-opus-20240229",
  "system": "You’re a friendly family counselor.",
  "messages": [
    {"role": "user", "content": "Who should wash the dishes?"}
  ],
  "max_tokens": 200
}
```

3. Meta (LLaMA)

- Format: Raw: string with tags; Hosted: OpenAI-style.

- Adaptation: Concatenate or keep as-is.

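- Example (Llama 3 instruct template sent to a Hugging Face text-generation endpoint; other Llama versions use different tags):

```json
{
  "inputs": "<|begin_of_text|><|start_header_id|>system<|end_header_id|>\n\nYou’re a friendly family counselor.<|eot_id|><|start_header_id|>user<|end_header_id|>\n\nWho should wash the dishes?<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n",
  "parameters": {"max_new_tokens": 150}
}
```

4. Google Gemini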

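- Format: The model name goes in the URL (e.g., models/gemini-1.5-pro:generateContent), the system prompt in system_instruction, and the turns in contents, with roles “user” and “model” and text wrapped in parts.

- Adaptation: Move “system” out, rename “assistant” to “model,” and wrap each content string in parts.

- Example:

```json
{
  "system_instruction": {"parts": [{"text": "You’re a friendly family counselor."}]},
  "contents": [
    {"role": "user", "parts": [{"text": "Who should wash the dishes?"}]}
  ],
  "generationConfig": {"maxOutputTokens": 150}
}
```

5. DeepSeek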

- Format: OpenAI-compatible messages.

- Adaptation: Minimal.

- Example:

```json
{
  "model": "deepseek-chat",
  "messages": [
    {"role": "system", "content": "You’re a friendly family counselor."},
    {"role": "user", "content": "Who should wash the dishes?"}
  ],
  "max_tokens": 150
}
```

6. xAI (Grok 3)

- Format: messages with “system,” “user,” and “assistant”; xAI documents its API as OpenAI-compatible (base URL https://api.x.ai/v1).

- Adaptation: Minimal. The example adds a brevity instruction to the system prompt, which is optional.

- Example:

```json
{
  "model": "grok-3",
  "messages": [
    {"role": "system", "content": "You’re a friendly family counselor. Keep answers clear and concise."},
    {"role": "user", "content": "Who should wash the dishes?"}
  ],
  "max_tokens": 150
}
```

Steps:

- Check API docs for schema.

- Remap roles — “system” might split or tag.

- Adjust structure — arrays or strings.

- Test it out.
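The “test it out” step can start before any request is sent. A minimal sketch of a pre-flight check, assuming only the structural rules covered above (system placement, Gemini’s role names and parts, Claude’s required max_tokens); `check_payload` is a hypothetical helper, not a full schema validator:

```python
def check_payload(body, api):
    """Return a list of problems found in an adapted body (empty list = looks OK).

    Covers only the role/structure rules discussed in this article.
    """
    problems = []
    if api in ("openai", "deepseek", "grok3"):
        for m in body.get("messages", []):
            if m.get("role") not in ("system", "user", "assistant", "tool"):
                problems.append(f"unexpected role {m.get('role')!r}")
    elif api == "claude":
        if any(m.get("role") == "system" for m in body.get("messages", [])):
            problems.append("system prompt belongs in the top-level 'system' field")
        if "max_tokens" not in body:
            problems.append("Claude requires max_tokens")
    elif api == "gemini":
        for c in body.get("contents", []):
            if c.get("role") not in ("user", "model"):
                problems.append(f"Gemini roles are 'user'/'model', got {c.get('role')!r}")
            if "parts" not in c:
                problems.append("each Gemini content entry needs a 'parts' list")
    else:
        raise ValueError(f"Unsupported API: {api}")
    return problems

unadapted = {"messages": [
    {"role": "system", "content": "You’re a friendly family counselor."},
    {"role": "user", "content": "Who should wash the dishes?"},
]}
print(check_payload(unadapted, "openai"))  # []
print(check_payload(unadapted, "claude"))  # two problems: system placement, max_tokens
```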

Tools to Simplify Adaptation

Adapting a Messages Array across LLM APIs — like GPT-4o, Claude, LLaMA, Gemini, DeepSeek, or Grok 3 — can feel like juggling syntax quirks. No single button fixes it all, but these tools can cut the hassle, reshaping your JSON to fit each model’s demands. Here’s how to streamline the process:

1. Custom Python Script

- What It Does: A lightweight function transforms your Messages Array into API-specific formats — keeping you in control without the grunt work.

- How It Works: Map roles and structures based on the target API, from OpenAI’s messages to LLaMA’s tagged strings.

- Example:

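```python
def adapt_messages(messages, target_api):
    # Pull out the first system prompt once; several APIs want it separately
    system = next((m["content"] for m in messages if m["role"] == "system"), None)
    chat = [m for m in messages if m["role"] != "system"]
    if target_api in ["openai", "deepseek", "grok3"]:
        # OpenAI-compatible APIs (DeepSeek, xAI's Grok 3) take the array as-is
        return {"messages": messages}
    elif target_api == "claude":
        # Claude: the system prompt is a top-level field, not a message
        body = {"messages": chat}
        if system:
            body["system"] = system
        return body
    elif target_api == "llama_raw":
        # Llama 3 instruct template, ending with an open assistant header
        turns = "".join(f"<|start_header_id|>{m['role']}<|end_header_id|>\n\n"
                        f"{m['content']}<|eot_id|>" for m in messages)
        header = "<|start_header_id|>assistant<|end_header_id|>\n\n"
        return {"inputs": "<|begin_of_text|>" + turns + header}
    elif target_api == "gemini":
        # Gemini: "contents" of text "parts"; "assistant" is renamed "model"
        body = {"contents": [{"role": "model" if m["role"] == "assistant" else "user",
                              "parts": [{"text": m["content"]}]} for m in chat]}
        if system:
            body["system_instruction"] = {"parts": [{"text": system}]}
        return body
    else:
        raise ValueError(f"Unsupported API: {target_api}")
```

- Why Use It: Pair it with requests to hit endpoints. It’s flexible, fast, and yours to tweak (e.g., add Grok 3’s quirks as they emerge).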
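To pair the script with requests, each API also needs its own URL, auth header, and token-limit field. A sketch that adds those to an already-adapted body; the endpoint URLs, header names, and anthropic-version value reflect the providers’ public docs at the time of writing and should be re-checked, and the environment-variable names are assumptions. Raw LLaMA is left out because its URL depends on where you host it:

```python
import os

# (base URL, env var holding the key) for the OpenAI-compatible APIs.
# Env var names are assumptions; URLs should be checked against current docs.
OPENAI_COMPATIBLE = {
    "openai": ("https://api.openai.com/v1", "OPENAI_API_KEY"),
    "deepseek": ("https://api.deepseek.com", "DEEPSEEK_API_KEY"),
    "grok3": ("https://api.x.ai/v1", "XAI_API_KEY"),
}

def build_request(api, body, model, max_tokens=150):
    """Return (url, headers, payload) for an already-adapted request body."""
    payload = dict(body)  # shallow copy so the caller's dict is untouched
    if api in OPENAI_COMPATIBLE:
        base, key_var = OPENAI_COMPATIBLE[api]
        payload.update(model=model, max_tokens=max_tokens)
        headers = {"Authorization": f"Bearer {os.environ.get(key_var, '')}"}
        return f"{base}/chat/completions", headers, payload
    if api == "claude":
        # Anthropic requires max_tokens and an anthropic-version header
        payload.update(model=model, max_tokens=max_tokens)
        headers = {"x-api-key": os.environ.get("ANTHROPIC_API_KEY", ""),
                   "anthropic-version": "2023-06-01"}
        return "https://api.anthropic.com/v1/messages", headers, payload
    if api == "gemini":
        # Gemini: model in the URL, token limit under generationConfig
        payload["generationConfig"] = {"maxOutputTokens": max_tokens}
        url = ("https://generativelanguage.googleapis.com/v1beta/models/"
               f"{model}:generateContent")
        return url, {"x-goog-api-key": os.environ.get("GEMINI_API_KEY", "")}, payload
    raise ValueError(f"Unsupported API: {api}")

messages = [{"role": "user", "content": "Who should wash the dishes?"}]
url, headers, payload = build_request("grok3", {"messages": messages}, "grok-3")
print(url)  # https://api.x.ai/v1/chat/completions
```

Sending is then `requests.post(url, headers=headers, json=payload)`; keeping request assembly separate from the network call makes the per-API differences easy to unit-test.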

2. LangChain Framework

- What It Does: Wraps your array in a template, smoothing over API differences so you focus on logic, not formatting.

- How It Works: Converts your messages into a unified prompt template, then pipes it to models like GPT-4o or Claude. Grok 3 can be reached the same way, since ChatOpenAI accepts a custom base_url pointing at xAI’s OpenAI-compatible API.

- Example:

```python
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI
from langchain_anthropic import ChatAnthropic  # alternative backend

# from_messages accepts (role, text) tuples
template = ChatPromptTemplate.from_messages([
    ("system", "You’re a friendly family counselor."),
    ("user", "Who should wash the dishes?"),
])

chain = template | ChatOpenAI(model="gpt-4o")
# Swap in ChatAnthropic(model="claude-3-opus-20240229"), or reach Grok 3 with
# ChatOpenAI(model="grok-3", base_url="https://api.x.ai/v1", api_key="...")
response = chain.invoke({})
print(response.content)
```

- Why Use It: Saves time on syntax; scalable if you juggle multiple LLMs.

3. API Wrappers

API wrappers are tools or frameworks that standardize the interface of an underlying API, making it easier to interact with diverse systems — like LLMs — in a uniform way. For adapting a Messages Array across models such as OpenAI’s GPT-4o, Anthropic’s Claude, Meta’s LLaMA, Google’s Gemini, DeepSeek, or xAI’s Grok 3, wrappers act like a translator, smoothing over each API’s quirks. Here’s a deeper dive:

- How They Work: Wrappers take a model’s native API (which might use odd formats, like LLaMA’s raw string inputs) and expose it through a familiar interface — often OpenAI’s messages array style. They’re typically server-side solutions that host the model and handle the reformatting internally. For example:

- You send a standard JSON request (e.g., {"messages": [...]}).

- The wrapper converts it to the model’s required input (e.g., Llama 3’s <|start_header_id|>…<|end_header_id|> template string) and passes it along.

- The response is reformatted back into a consistent structure.
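The third step, normalizing responses, matters because the reply text sits in a different place for each API. A sketch of that step as a wrapper might do it, assuming the response shapes of OpenAI-compatible chat completions, Anthropic’s content blocks, Gemini’s candidates, and Hugging Face TGI’s generated_text; the helper names are hypothetical and the sample replies are hand-written, not captured API output (real replies carry more fields, such as usage counts):

```python
def extract_text(api, response):
    """Pull the reply text out of each API's response JSON."""
    if api in ("openai", "deepseek", "grok3"):
        return response["choices"][0]["message"]["content"]
    if api == "claude":
        # Anthropic returns a list of content blocks; keep the text ones
        return "".join(b["text"] for b in response["content"] if b["type"] == "text")
    if api == "gemini":
        parts = response["candidates"][0]["content"]["parts"]
        return "".join(p.get("text", "") for p in parts)
    if api == "llama_raw":
        # Hugging Face TGI's /generate endpoint returns {"generated_text": ...}
        return response["generated_text"]
    raise ValueError(f"Unsupported API: {api}")

def as_openai_response(api, response):
    """Re-wrap any provider's reply in the OpenAI chat-completion shape."""
    message = {"role": "assistant", "content": extract_text(api, response)}
    return {"choices": [{"index": 0, "message": message}]}

claude_reply = {"content": [{"type": "text", "text": "Take turns by night."}]}
gemini_reply = {"candidates": [{"content": {"role": "model",
                                            "parts": [{"text": "Take turns by night."}]}}]}
print(as_openai_response("claude", claude_reply)
      == as_openai_response("gemini", gemini_reply))  # True
```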

Examples of Wrappers:

- vLLM: An open-source serving platform that hosts models like LLaMA, offering an OpenAI-compatible endpoint. You deploy your model, and it accepts messages arrays regardless of the model’s native format.

- LM Studio: A user-friendly tool for running open-weight LLMs locally, with an OpenAI-like API option. Load a LLaMA model, and it mimics GPT-4o’s interface. Closed models such as Grok 3 can’t be loaded this way.

- Llama.cpp: A lightweight framework for running LLaMA models, which can be paired with an OpenAI-style server (e.g., via llama-cpp-python).

Benefits:

- Uniformity: No need to rewrite your Messages Array for each API — just use one format (e.g., OpenAI’s).

- Scalability: Test or deploy multiple models with the same codebase; hosted OpenAI-compatible APIs such as xAI’s (for Grok 3) slot in alongside self-hosted ones.

- Ease: Ideal for developers who want to skip manual adaptation, especially for open-source models like LLaMA.

Limitations:

- Setup Cost: Requires hosting — either locally or on a server — which takes more effort than a Python script.

- Model Support: Wrappers can only serve models whose weights you can run; closed models such as Grok 3 stay behind their providers’ APIs.

- Overhead: Adds a layer of abstraction, which might slow things down or hide model-specific features that the OpenAI schema has no slot for.

Use Case Example:

- Say you’re testing Grok 3 and LLaMA. Without a wrapper, you’d keep Grok 3’s messages array and rebuild the same conversation as LLaMA’s tagged string. With vLLM serving LLaMA behind an OpenAI-compatible endpoint, and xAI’s API already OpenAI-compatible, you send the same JSON to both and change only the URL and model name, saving time and sanity.

Wrappers shine when you’re juggling multiple models or building a production system, but they’re overkill for quick one-offs compared to a Python script.

Example (a LLaMA model served by vLLM; the model ID is whatever you deployed):

```json
{
  "model": "meta-llama/Meta-Llama-3-8B-Instruct",
  "messages": [
    {"role": "system", "content": "You’re a friendly family counselor."},
    {"role": "user", "content": "Who should wash the dishes?"}
  ]
}
```

Why Use It: Cuts adaptation entirely for supported models, ideal for testing or production.

Tools Summary: Picking the Right Fix for Your Messages Array

Adapting a Messages Array across LLM APIs — like GPT-4o, Claude, LLaMA, Gemini, DeepSeek, or Grok 3 — doesn’t have to be a slog. Three tools stand out to ease the pain: a custom Python script, the LangChain framework, and API wrappers. Each has its sweet spot:

- Custom Python Script: The DIY champ — flexible and lightweight. It’s perfect for hands-on devs who want a quick, tailored solution. With a few lines, you can morph your array for OpenAI, Grok 3, or LLaMA’s raw tags. Pair it with requests, and you’re hitting endpoints in no time. Best for small projects or when you need full control.

- LangChain Framework: The team player, ideal for scaling up. It wraps your array in a template, smoothing over API quirks so you can swap GPT-4o for Claude (or Grok 3, via xAI’s OpenAI-compatible endpoint) with minimal fuss. It’s a bit heavier to set up, but it pays off for complex apps or multi-model testing.

- API Wrappers: The set-it-and-forget-it option. Tools like vLLM or LM Studio host open-weight models (e.g., LLaMA) and serve an OpenAI-like interface, so your array works as-is. They’re great for consistency across models or production use, though they require hosting effort upfront.

Recommendation: Start with a Python script for speed and simplicity; it’s versatile enough for most needs, including Grok 3’s quirks as they emerge. If your project grows or demands chaining (like a CoT pipeline), lean on LangChain. Reserve API wrappers for when you’re standardizing multiple models long-term, especially self-hosted ones like LLaMA. Together, these tools turn a portability headache into a solved problem, letting you focus on what your AI can do, not how to talk to it.

A CoT Example: Chaining JSON Steps

For apps needing step-by-step reasoning, chaining JSON outputs — like analyzing a dishwashing situation then proposing a plan — shows off a two-step chain-of-thought (CoT) process. Here’s how it works with xAI’s Grok 3, using message history to link steps, with notes on adaptation:

- Step 1: Analyze the situation.

```json
{
  "model": "grok-3",
  "messages": [
    {"role": "system", "content": "You’re a friendly family counselor. Return answers in JSON."},
    {"role": "user", "content": "Step 1: Analyze a couple’s dishwashing situation (schedules, preferences). Return a JSON summary."}
  ],
  "max_tokens": 150
}
```

Output:

```json
{
  "step": 1,
  "analysis": {
    "schedules": "Partner A works late, Partner B is home earlier.",
    "preferences": "Partner A hates dishes, Partner B doesn’t mind."
  }
}
```

- Step 2: Propose a Plan (Using History)

```json
{
  "model": "grok-3",
  "messages": [
    {"role": "system", "content": "You’re a friendly family counselor. Return answers in JSON."},
    {"role": "user", "content": "Step 1: Analyze a couple’s dishwashing situation (schedules, preferences). Return a JSON summary."},
    {"role": "assistant", "content": "{\"step\": 1, \"analysis\": {\"schedules\": \"Partner A works late, Partner B is home earlier.\", \"preferences\": \"Partner A hates dishes, Partner B doesn’t mind.\"}}"},
    {"role": "user", "content": "Step 2: Using the prior analysis, propose a fair dishwashing plan."}
  ],
  "max_tokens": 150
}
```

Output:

```json
{
  "step": 2,
  "solution": "Partner B washes dishes on weekdays when Partner A is late; they alternate weekends."
}
```

Adaptation Notes: Grok 3 and OpenAI carry history via the messages array: Step 1’s assistant response flows into Step 2’s context naturally, as long as it fits the context window (100k+ tokens for Grok 3). Claude needs the system split, but the JSON assistant turn passes through as an ordinary string. Gemini keeps no server-side session either, so the assistant turn goes back into contents with the role “model.” LLaMA’s raw format needs the whole history re-rendered into the tagged string on every call unless it’s hosted behind an OpenAI-style endpoint. For portability, keep Step 1’s JSON in the user prompt as a fallback if history handling diverges.
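The chaining itself is just list manipulation. A minimal sketch of both strategies from the notes, building Step 2 either from real history or from the inlined-JSON fallback; the helper names are hypothetical, and `json.loads` is used only to fail fast if Step 1 didn’t return valid JSON:

```python
import json

def next_step_messages(history, step_reply, next_prompt):
    """Carry the prior reply forward as an assistant turn, then ask the next step."""
    json.loads(step_reply)  # raises ValueError if the step didn't return valid JSON
    return history + [
        {"role": "assistant", "content": step_reply},
        {"role": "user", "content": next_prompt},
    ]

def inline_fallback(history, step_reply, next_prompt):
    """Portable fallback: keep only the system prompt and quote the JSON in the user turn."""
    system = [m for m in history if m["role"] == "system"]
    return system + [{"role": "user",
                      "content": f"Prior analysis (JSON): {step_reply}\n\n{next_prompt}"}]

step1 = [
    {"role": "system", "content": "You’re a friendly family counselor. Return answers in JSON."},
    {"role": "user", "content": "Step 1: Analyze a couple’s dishwashing situation "
                                "(schedules, preferences). Return a JSON summary."},
]
reply1 = json.dumps({"step": 1, "analysis": {
    "schedules": "Partner A works late, Partner B is home earlier.",
    "preferences": "Partner A hates dishes, Partner B doesn’t mind."}})
prompt2 = "Step 2: Using the prior analysis, propose a fair dishwashing plan."

step2 = next_step_messages(step1, reply1, prompt2)
print([m["role"] for m in step2])  # ['system', 'user', 'assistant', 'user']
```

The history version suits OpenAI-compatible APIs (and Claude or Gemini after the usual role adaptation); the fallback trades context for a single self-contained prompt that every format can carry.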

Wrapping Up

Adapting a Messages Array across LLM APIs (GPT-4o, Claude, LLaMA, Gemini, DeepSeek, or Grok 3) means navigating role quirks and format shifts. Map it manually, script it, use LangChain, or put models behind an OpenAI-compatible wrapper. From quick prompts to CoT pipelines, you’ll unlock the flexibility to talk to any model, including xAI’s Grok 3, with ease.